What is a search engine spider (SEO spider)? What does crawling mean? What does the Google spider do, and how does it crawl and index pages? This article covers the SEO spider definition, explanation, and usage.

Search Engine Spider

SEO Spider Definition

A search engine spider is also known as a spider, web spider, SEO spider, crawler, or web crawler. The activity itself is called crawling.

SEO Spider Explanation

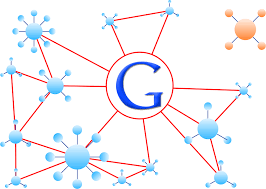

A search engine spider is a program or automated script that browses the World Wide Web in a methodical, automated manner. This process is usually called web crawling or spidering.
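To make "methodical" concrete, here is a minimal sketch of a crawl loop. It assumes a tiny in-memory site (the hypothetical `PAGES` dictionary) instead of real HTTP requests, and the names `LinkParser` and `crawl` are made up for illustration. It visits each page once, breadth-first, by following the links it finds.

```python
from collections import deque
from html.parser import HTMLParser

# Hypothetical in-memory "web": URL -> HTML. A real spider would fetch over HTTP.
PAGES = {
    "/home": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "/about": '<a href="/home">Home</a>',
    "/blog": '<a href="/about">About</a> <a href="/post-1">Post</a>',
    "/post-1": "<p>No links here.</p>",
}


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start):
    """Visit pages breadth-first, each page once, and return the visit order."""
    seen = {start}
    queue = deque([start])
    order = []
    while queue:
        url = queue.popleft()
        html = PAGES.get(url)
        if html is None:
            continue  # unknown page: nothing to read
        order.append(url)
        parser = LinkParser()
        parser.feed(html)
        for link in parser.links:
            if link not in seen:  # never queue the same page twice
                seen.add(link)
                queue.append(link)
    return order


print(crawl("/home"))  # ['/home', '/about', '/blog', '/post-1']
```

The `seen` set is what keeps the spider from looping forever when pages link back to each other, as `/about` and `/home` do here.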

Search Engine Spider Usage

Many websites use web crawling as a means of providing up-to-date data. A spider is mainly used to create a copy of every page it visits so that a search engine can archive and index those pages. Indexing the downloaded pages is what allows the search engine to answer searches quickly.
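One common way to get fast searches from downloaded pages is an inverted index, which maps each word to the pages that contain it. The sketch below is an illustration under stated assumptions, not how any particular search engine does it: the `DOWNLOADED` pages and the `build_index` and `lookup` names are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical pages a spider has already downloaded: URL -> plain text.
DOWNLOADED = {
    "/home": "Welcome to our SEO blog",
    "/spider": "A spider crawls web pages",
    "/index": "The index makes web searches fast",
}


def build_index(pages):
    """Map each lowercase word to the set of URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index


def lookup(index, word):
    """Return the URLs containing the word, sorted for stable output."""
    return sorted(index.get(word.lower(), set()))


index = build_index(DOWNLOADED)
print(lookup(index, "web"))  # ['/index', '/spider']
print(lookup(index, "seo"))  # ['/home']
```

A lookup is a single dictionary access, so the search does not have to reread every page each time a user types a keyword.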

A search engine spider can also be used to automate maintenance tasks on a website, such as checking links or validating the code. Spiders can also gather specific types of information from web pages, such as e-mail addresses (sometimes for spamming).
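As an example of the link-checking task, the following sketch scans a hypothetical site snapshot (`SITE`, with `example.com` as a placeholder domain) and reports links whose target is not among the known pages. It only checks against the snapshot; a real checker would request each URL and inspect the HTTP status. `HrefCollector` and `find_broken_links` are illustrative names.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical site snapshot: absolute URL -> HTML. example.com is a placeholder.
SITE = {
    "https://example.com/": (
        '<a href="about.html">About</a> <a href="/contact">Contact</a>'
    ),
    "https://example.com/about.html": (
        '<a href="/">Home</a> <a href="team.html">Team</a>'
    ),
}


class HrefCollector(HTMLParser):
    """Collects the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def find_broken_links(site):
    """Return (page, target) pairs whose target is not a known page."""
    broken = []
    for page, html in site.items():
        collector = HrefCollector()
        collector.feed(html)
        for href in collector.hrefs:
            target = urljoin(page, href)  # resolve relative links
            if target not in site:
                broken.append((page, target))
    return broken


for page, target in find_broken_links(SITE):
    print(f"{page} links to missing page {target}")
```

Resolving each `href` with `urljoin` matters because pages often use relative links such as `team.html`, which only make sense relative to the page they appear on.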

Crawl Meaning

Crawling refers to the work of a program that visits websites, reads their pages, and saves the information in order to create entries for a search engine's index.

The common search engines on the web (such as Google, Bing, and Yandex) all have such a program, which is also known as a "spider" or a "crawler".

Crawlers are typically programmed to visit sites that have been submitted by their owners as new or updated, download their content, and archive it. When a user searches for a keyword, the search engine returns content relevant to that keyword.

Related content: What is crawling?

Google Spider

The Google spider, known as Googlebot, is the bot that Google uses to crawl the web. How does the Google spider work? Google gathers information from many different sources, such as:

- Web pages.

- User-submitted content, such as Google My Business and Maps user submissions.

- Book scanning.

- Open-source and public databases on the Internet.

- Any other open-source resource.

Google follows three basic steps to generate results from web pages:

- Crawling

- Indexing

- Serving (and ranking)
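The indexing and serving steps can be sketched as follows. This is a toy illustration, not Google's method: ranking here is a simple count of how often the query words appear on each page, chosen only because it is easy to follow. The `DOCS` data and the `tokenize`, `build_counts`, and `serve` names are hypothetical.

```python
import re
from collections import Counter

# Hypothetical documents that have already been crawled: URL -> text.
DOCS = {
    "/a": "spider spider crawler",
    "/b": "spider index",
    "/c": "ranking and serving",
}


def tokenize(text):
    """Split text into lowercase words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_counts(docs):
    """Indexing step: store per-word counts for each URL."""
    return {url: Counter(tokenize(text)) for url, text in docs.items()}


def serve(index, query):
    """Serving step: rank URLs by how often the query words occur."""
    words = tokenize(query)
    scores = {}
    for url, counts in index.items():
        score = sum(counts[w] for w in words)
        if score > 0:
            scores[url] = score
    # Highest score first; ties are broken alphabetically by URL.
    return sorted(scores, key=lambda url: (-scores[url], url))


index = build_counts(DOCS)
print(serve(index, "spider"))  # ['/a', '/b']
```

Pages that never mention the query words are left out of the results entirely, which is why `/c` does not appear for the query `spider`.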

Note: A spider bot is the same thing as a crawler bot.
