What is crawling, what is a web crawler, and how is Googlebot used? What are search engine crawlers, and why do they matter to the World Wide Web (WWW) and to websites across the internet? This post answers those questions.

Web Crawler

A web crawler goes by several names: web crawler, search engine crawler, spider, or spiderbot, usually shortened to crawler. The activity itself is called crawling or web crawling. Googlebot is one well-known example.

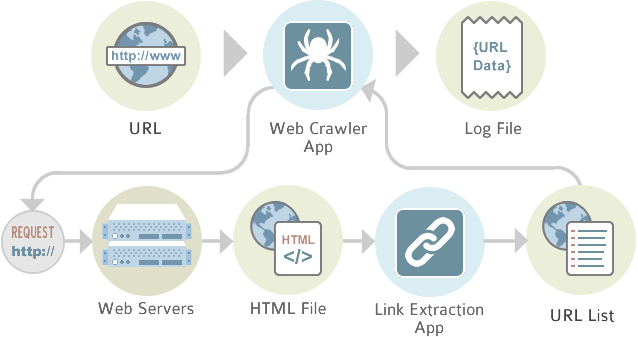

A web crawler is a program that systematically browses the World Wide Web (WWW), typically so that search engines can index websites (crawling).

About Search Engine Crawlers

Googlebot

Search engine crawlers are the software through which a website's content and pages reach the search engines and stay up to date there. A web crawler (such as Googlebot) copies pages for processing by a search engine, which indexes the downloaded pages so that search engine users can search more efficiently.
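To make the "copy pages, then follow their links" idea concrete, here is a minimal sketch of a breadth-first crawl loop. It is not how Googlebot works internally. The `PAGES` dictionary is a hypothetical in-memory stand-in for the web, and `LinkParser` and `crawl` are illustrative names; a real crawler would fetch pages over HTTP.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "web": URL -> HTML. A real crawler would fetch over HTTP.
PAGES = {
    "http://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "http://example.com/a": '<a href="/b">B</a> crawler basics',
    "http://example.com/b": '<a href="/">home</a> index me',
}


class LinkParser(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed, fetch):
    """Breadth-first crawl from seed; returns {url: html} for every page reached."""
    seen = {seed}
    frontier = deque([seed])
    store = {}
    while frontier:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:  # page could not be fetched, skip it
            continue
        store[url] = html  # the "copy" handed to the indexer
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return store


copied = crawl("http://example.com/", PAGES.get)
print(sorted(copied))
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, as the third page does here.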

Web crawlers consume resources on the sites they visit and often visit them without approval. Issues of scheduling, load, and politeness come into play when large collections of pages are accessed.

Mechanisms exist for public websites that do not wish to be crawled to make this known to the crawling agent. For example, a site can include a robots.txt file that asks Googlebot and other bots to index only parts of the website, or nothing at all.
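As a rough illustration of how a crawler might honor robots.txt, the sketch below uses Python's standard `urllib.robotparser` module. The robots.txt content and the `example.com` URLs are made up for this example, and `allowed` is just a helper name chosen here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from text instead of fetching


def allowed(agent, url):
    """True if the given user agent may fetch the URL under these rules."""
    return parser.can_fetch(agent, url)


print(allowed("Googlebot", "http://example.com/public"))     # True
print(allowed("Googlebot", "http://example.com/private/x"))  # False
print(allowed("OtherBot", "http://example.com/public"))      # False
```

In this made-up file, Googlebot may crawl everything except `/private/`, while every other bot is asked to stay away entirely. Keep in mind that robots.txt is a request: a well-behaved crawler checks it before fetching, but nothing forces a crawler to comply.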

Importance of Crawl / Crawling

What are web crawling and web crawlers good for?

The number of web pages on the internet is huge; even the largest web crawlers fall short of building a complete index. For this reason, search engines struggled to give relevant results in the early years of the World Wide Web, before 2000. Nowadays, relevant results are returned almost instantly.

Crawling policy

The behavior of a web crawler is the outcome of a combination of policies, which we can define as:

- The selection policy, which states which pages to download.

- The re-visit policy, which states when to check pages for changes.

- The politeness policy, which states how to avoid overloading websites.

- The parallelization policy, which states how to coordinate distributed web crawlers.
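Of the four policies above, the politeness policy is the easiest to show in a few lines. The sketch below is one possible approach, not a standard implementation: it enforces a minimum delay between requests to the same host. The class name `PolitenessGate`, the `min_delay` parameter, and the injectable `clock` are all assumptions made for this example.

```python
import time
from urllib.parse import urlparse


class PolitenessGate:
    """Enforces a minimum delay between requests to the same host."""

    def __init__(self, min_delay=1.0, clock=time.monotonic):
        self.min_delay = min_delay
        self.clock = clock
        self.next_allowed = {}  # host -> earliest time of the next request

    def wait_time(self, url):
        """Seconds to wait before url may be fetched; also reserves the slot."""
        host = urlparse(url).netloc
        now = self.clock()
        ready_at = max(now, self.next_allowed.get(host, now))
        self.next_allowed[host] = ready_at + self.min_delay
        return ready_at - now


# Usage with a frozen clock so the result is deterministic.
gate = PolitenessGate(min_delay=2.0, clock=lambda: 100.0)
delays = [
    gate.wait_time(u)
    for u in ("http://a.example/1", "http://a.example/2", "http://b.example/")
]
print(delays)  # [0.0, 2.0, 0.0]
```

The second request to `a.example` has to wait, while the first request to `b.example` does not, because the delay is tracked per host. A real crawler would sleep for the returned number of seconds (or schedule the URL for later) before fetching.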
